Knowing how search engines see your website is central to technical SEO. Googlebot simulators are tools that approximate the way Google’s web crawler fetches, renders, and indexes your pages, which helps webmasters and SEO specialists pinpoint issues that can hinder search performance. This article covers five widely used Googlebot simulators, chosen for their efficiency, accuracy, and ease of use, and then explains how to emulate the Googlebot user agent yourself.

Exploring the Best Googlebot Simulators to Optimize Your Website

Understanding Googlebot Simulators

Googlebot simulators are essential tools that allow developers and SEO specialists to emulate how Google’s web crawling bot, or Googlebot, perceives a website. They help in identifying potential issues which might affect a site’s ranking and provide insights into how well search engines can understand webpage content. By using these simulators, you can enhance your site’s SEO and ensure that it performs well in search engine rankings.

The Importance of Emulating Googlebot Behavior

Emulating Googlebot behavior is crucial for diagnosing SEO-related issues. It helps you determine whether content is blocked, see how JavaScript is processed, and identify crawlability problems. Because search engines can only index what is actually served to their crawlers, you need to know what those crawlers receive. With that knowledge, webmasters can adjust their pages to meet the criteria that affect search visibility and performance.
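One concrete way to check whether content is blocked from indexing is to look for a robots meta tag in the HTML a crawler receives. The following is a minimal sketch using only the Python standard library. The class and function names are hypothetical, and it covers only `<meta name="robots">` and `<meta name="googlebot">` tags, not the `X-Robots-Tag` HTTP header or robots.txt.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so normalise them.
        attr_map = {key: (value or "") for key, value in attrs}
        if attr_map.get("name", "").lower() in {"robots", "googlebot"}:
            for part in attr_map.get("content", "").split(","):
                directive = part.strip().lower()
                if directive:
                    self.directives.add(directive)


def blocks_indexing(html):
    """Return True if the page's robots meta tags ask crawlers not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives
```

Running `blocks_indexing` on the HTML returned for a Googlebot-style request and on the HTML returned for a normal browser request is a quick way to spot pages that are unintentionally excluded.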

Features to Look for in a Googlebot Simulator

When evaluating different Googlebot simulators, several key features should be considered. Firstly, look for simulators that provide real-time analysis and have capabilities to simulate various types of Googlebots (like mobile or desktop). Also, assess their ability to highlight errors and warnings, simulate JavaScript rendering, and offer comprehensive reporting tools. Built-in tools for previewing and comparing before-and-after results are advantageous for making informed modifications.

Top Googlebot Simulators You Can Use

1. Screaming Frog SEO Spider: Known for its extensive features, it offers an integrated Googlebot simulation that can mimic how search engines crawl your site, with a particular focus on JavaScript-heavy content.

2. Sitebulb: Provides enriched visual data and crawler simulations that help in understanding website performance. It’s particularly effective for analyzing mobile responsiveness and UX.

3. Google Search Console’s URL Inspection Tool: While not a standalone simulator, this tool gives direct insights from Google regarding how URLs are crawled, providing diagnostic reports and improvement suggestions.

4. DeepCrawl: Offers robust crawling with an emphasis on error identification and structural analysis, making it easier to spot issues that Googlebot might encounter.

5. Botify: This tool not only simulates Googlebot actions but also emphasizes the interaction between bots and structured data, providing a comprehensive overview of potential issues and opportunities.

Comparison of Key Features in Leading Googlebot Simulators

| Simulator | Googlebot Emulation | JavaScript Rendering | Error Reporting | SEO Analytics |
|---|---|---|---|---|
| Screaming Frog SEO Spider | Yes | Yes | Comprehensive | In-depth |
| Sitebulb | Yes | Partial | Detailed | Visual Reports |
| Google Search Console’s URL Inspection Tool | Limited (one URL at a time) | Yes (the live test shows the rendered page) | Detailed | Direct from Google |
| DeepCrawl | Yes | Yes | Detailed | Actionable Insights |
| Botify | Yes | Yes | Comprehensive | Strategic Data |

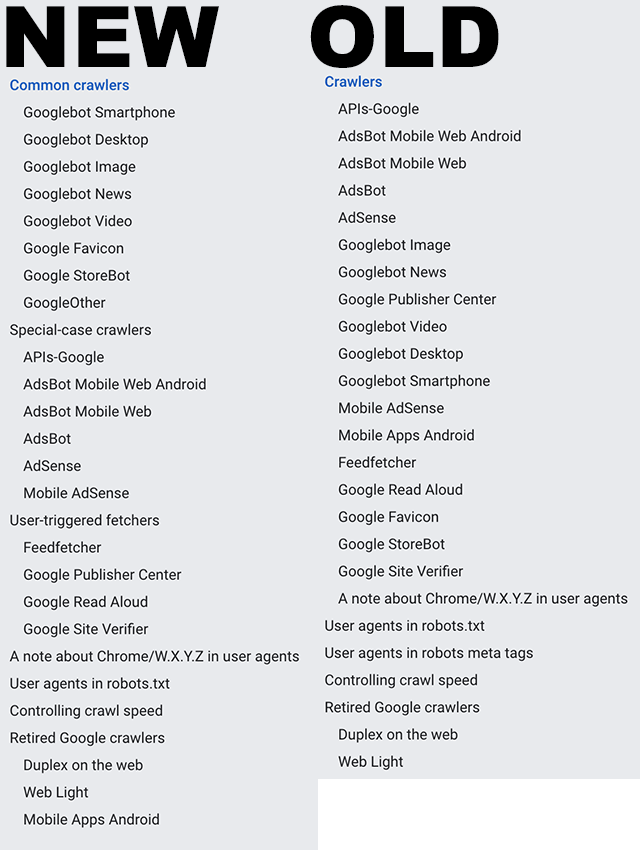

What are the names of Google bots?

Google deploys several automated programs, or bots, that perform various tasks across the internet. Below are the names and some details about these bots.

Commonly Known Google Bots

Various bots are utilized by Google for specific tasks. While many people might only be familiar with Googlebot, there are actually several more:

- Googlebot: The main web crawling bot used by Google to gather information and index webpages. It helps ensure that the Google search engine is up-to-date.

- Googlebot-Image: Dedicated to crawling and indexing images across the web. It ensures that Google’s image search results are comprehensive and current.

- Googlebot-News: Specifically designed to gather news-related content from various websites, ensuring that news search results are rich and recent.

Specialized Google Bots for Specific Tasks

Google employs specialized bots for distinct tasks which go beyond mere webpage indexing. Here are a few:

- AdsBot (user agent token `AdsBot-Google`): Crawls the landing pages used in Google Ads to check their quality and compliance with Google’s advertising standards.

- Mobile AdsBot (user agent token `AdsBot-Google-Mobile`): Similar to AdsBot, but checks landing pages as they appear on mobile devices to ensure they follow Google’s policies.

- Google-Structured Data Testing Tool: This bot checks the structured data markup on web pages to ensure that rich snippets and other Google features are properly implemented.

Lesser-Known Google Bots

Beyond the main bots, Google also uses lesser-known bots for specific functionalities. These bots might not be as prominent but are crucial for certain Google services:

- Google-Read-Aloud: This bot powers Google’s read-aloud feature, allowing users to listen to web content rather than read it.

- Google-Safety: Crawls for unsafe content, such as malware or phishing threats, helping to protect Google’s users.

- Googlebot-Video: This bot focuses on crawling and indexing video content, ensuring that Google provides accurate search results for video queries.
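When reading server logs, it can help to map a request’s User-Agent header to one of the crawlers listed above. The sketch below is a simple substring heuristic, not an official parser. The token strings are assumptions taken from Google’s published crawler list rather than from this article, and the function name is hypothetical. A match only shows what the client claims to be, since any client can send these strings.

```python
from typing import Optional

# More specific tokens come first so that, for example,
# "AdsBot-Google-Mobile" is not reported as plain "AdsBot-Google".
KNOWN_TOKENS = [
    "AdsBot-Google-Mobile",
    "AdsBot-Google",
    "Googlebot-Image",
    "Googlebot-News",
    "Googlebot-Video",
    "Google-Read-Aloud",
    "Googlebot",
]


def classify_google_crawler(user_agent: str) -> Optional[str]:
    """Return the first known Google crawler token found in the header, if any."""
    lowered = user_agent.lower()
    for token in KNOWN_TOKENS:
        if token.lower() in lowered:
            return token
    return None
```

For example, passing the classic desktop Googlebot string returns `"Googlebot"`, while an ordinary browser string returns `None`.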

How to emulate Googlebot user agent?

What is Googlebot User Agent?

Googlebot is a web crawling bot or agent used by Google to gather and index the content of web pages. The User Agent string identifies Googlebot to the servers of the websites it crawls, allowing these sites to deliver the appropriate content and ensure compatibility with Google’s search engine indexing protocols.

- Web Crawling and Indexing: Googlebot visits billions of pages across the web, collecting content to keep Google’s search index updated.

- Identification: By identifying itself as Googlebot in the User Agent string, web servers can distinguish its requests from other bots and standard users.

- Access and Performance Optimization: Websites can customize content delivery and server performance based on the User Agent to optimize the indexing process.
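Because any client can send a Googlebot User Agent string, servers that rely on it for identification usually confirm the request with a DNS check as well. The following is a minimal sketch of that reverse-then-forward lookup. The function names are hypothetical, and the domain suffixes are an assumption based on Google’s published verification guidance. `socket.gethostbyname_ex` resolves IPv4 only, so IPv6 addresses would need `socket.getaddrinfo` instead.

```python
import socket

# Assumed hostname suffixes for Google crawlers.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_google_hostname(hostname: str) -> bool:
    """Check that a reverse-DNS hostname belongs to a Google crawler domain."""
    host = hostname.rstrip(".").lower()
    return host.endswith(GOOGLE_CRAWLER_DOMAINS)


def verify_googlebot_ip(ip: str) -> bool:
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm."""
    try:
        hostname, _, _ = socket.gethostbyaddr(ip)
        if not is_google_hostname(hostname):
            return False
        _, _, addresses = socket.gethostbyname_ex(hostname)
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror).
        return False
    return ip in addresses
```

The forward lookup matters because the owner of an IP address controls its reverse DNS record and could point it at any hostname.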

How to Emulate Googlebot User Agent in Your Browser

For testing purposes, developers might want to emulate Googlebot’s User Agent in web browsers to see how a website appears when visited by Googlebot. Here’s how you can do this in Google Chrome:

- Open Developer Tools: Launch Google Chrome and navigate to the website you want to test. Open Developer Tools by pressing `Ctrl+Shift+I` (or `Cmd+Option+I` on Mac).

- Toggle Device Toolbar: In Developer Tools, click on the Toggle Device Toolbar button or press `Ctrl+Shift+M` (or `Cmd+Shift+M` on Mac) to enable device simulation.

- Set User Agent String: Click on the three vertical dots in the Developer Tools pane, select More tools > Network conditions. Under User agent, uncheck Use browser default and choose Custom…. Input the Googlebot User Agent string:

`Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)`
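The same check can be scripted outside the browser. Here is a minimal sketch that sends a request with the Googlebot User Agent string using Python’s standard library. The function names are hypothetical. It fetches raw HTML only, so it does not execute JavaScript the way the browser approach does. Some sites also reject requests that claim to be Googlebot but come from non-Google IP addresses.

```python
import urllib.request
from typing import Tuple

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_googlebot_request(url: str) -> urllib.request.Request:
    """Create a request object that identifies itself with the Googlebot UA string."""
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_as_googlebot(url: str, timeout: float = 10.0) -> Tuple[int, str]:
    """Fetch the URL and return (HTTP status, decoded body)."""
    request = build_googlebot_request(url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        body = response.read().decode(charset, errors="replace")
        return response.status, body
```

Comparing the body returned here with the body returned for a normal browser User Agent can reveal pages that serve different content to the crawler.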

Why Emulating Googlebot Can be Useful

Emulating Googlebot can provide insights into how Google perceives a site, aiding in SEO improvements. This process helps developers and SEO professionals to ensure that Googlebot is correctly accessing and rendering a site’s content.

- Improving SEO: Observing how Googlebot interacts with your site can help identify any issues that may impact your site’s search ranking.

- Diagnosing Rendering Issues: Ensuring that all scripts and styles are properly loaded when visited by Googlebot can prevent rendering issues that might affect indexing and visibility.

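- Testing Blocking Rules: Requests sent with the Googlebot User Agent show whether server-side rules, such as firewall or user-agent filters, block or alter responses for the crawler. A browser does not obey robots.txt, so check those rules separately against the `Googlebot` user agent token.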
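Robots.txt rules can be checked directly against the Googlebot user agent token. The sketch below uses Python’s built-in `urllib.robotparser`. The helper name and the sample rules are hypothetical. Python’s parser applies the first matching rule within a group, whereas Google documents a most-specific-match approach, so results may differ for files that mix overlapping `Allow` and `Disallow` lines.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)
```

To test a live site, `RobotFileParser.set_url()` followed by `read()` loads the file over the network instead of from a string.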

Frequently Asked Questions

What is a Googlebot Simulator and why do you need one?

A Googlebot Simulator is a tool designed to replicate the behavior of Google’s web-crawling bot, allowing you to see how your website might appear to Google’s search engine crawlers. These simulators mimic the way Googlebot navigates your website, interpreting and parsing content, assessing load speed, and following links. Understanding this process is crucial because it helps you identify elements of your website that might affect your search engine optimization (SEO) efforts. By analyzing how a Googlebot views your site, you can make informed changes to improve your site’s ranking potential and fix any issues that may prevent it from being indexed properly.

How can Googlebot Simulators improve your website’s SEO?

Using a Googlebot Simulator allows you to identify pages that may be preventing Google from accessing your content efficiently. Simulators highlight technical issues such as broken links, slow-loading pages, and misconfigured robots.txt files, which might block Googlebot inadvertently. By identifying these problems, you can rectify them to ensure that search engine crawlers access and index your content smoothly. Understanding the crawler’s journey through your site gives valuable insights into optimizing your site architecture, resulting in improved crawl efficiency and ultimately boosting your site’s visibility in search results.

What are some of the top Googlebot Simulators available today?

There are numerous Googlebot Simulators, each offering unique features to analyze your site’s interaction with search engine bots. Screaming Frog SEO Spider is a popular choice, offering a desktop program that crawls websites like a search engine bot and highlights issues that need fixing. Another tool, DeepCrawl, provides a cloud-based service that, aside from simulating bots, offers in-depth analysis on every aspect of your site. Sitebulb is another robust option known for its extensive visualization of crawl data. Each of these tools provides a different lens through which to scrutinize your site, allowing for comprehensive SEO analysis.

Are there any limitations to using Googlebot Simulators?

While Googlebot Simulators are incredibly useful, they do have some limitations. These tools may not perfectly capture the behavior of the actual Googlebot due to the complexity of Google’s proprietary algorithms and frequent updates. Furthermore, simulators might not handle dynamic content or JavaScript-heavy sites as efficiently as Google’s real crawler, potentially leading to incomplete insights. It’s essential to use simulators as part of a broader SEO strategy, supplementing their use with actual performance data and analytics to ensure a well-rounded understanding of your website’s performance and visibility.